This is the second in a series of posts about VMware vSphere availability features: how they work and what their requirements are.

Please keep in mind that the intended audience for these posts is sysadmins who want to know a little more about vSphere. If you have been working with VMware products for a while, you might find this post kind of boring.

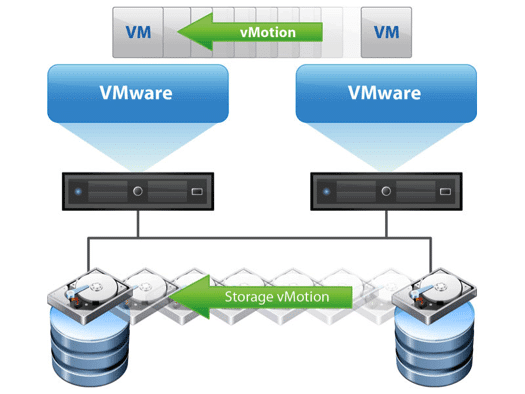

In my last post of the series I covered vSphere vMotion, but that’s not the only way of migrating VMs around in VMware environments.

I would like to discuss a few of them.

Storage vMotion

Storage vMotion was introduced in VMware Infrastructure 3.5 (ESX/ESXi 3.5). It allows administrators to move virtual machines across different datastores without powering them off.

Some of its key features are:

– Workload migration across different physical LUNs.

– IOPS load balancing (with Storage DRS datastore clusters).

– Storage capacity management.

– The ability to perform maintenance on physical storage without downtime.

How does it work?

Before I explain that, please take a look at this post regarding what files compose a virtual machine.

The process is as follows:

- The user selects the VM to migrate and a destination datastore. This defines the source and destination datastores and the files to be migrated.

- The VM folder is copied to the destination datastore. This includes all VM files except the VMDKs.

- Once those files are copied, a “shadow VM” is started on the destination datastore and kept idle while the VM disk file(s) finish copying.

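- During the disk file transfer, all changes to blocks that have already been copied are tracked with Changed Block Tracking (CBT).

- svMotion repeats this iteratively, re-copying the changed blocks while the transfer continues. (This iterative CBT approach is how svMotion worked in vSphere 4.x; starting with vSphere 5.0, a mirror driver writes guest changes to both the source and destination disks, so the disks are copied in a single pass.)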

- Once the number of outstanding changed blocks is small enough, svMotion invokes a Fast Suspend and Resume (FSR), similar to the stun during vMotion, to transfer the running VM to the idle “shadow VM”.

- After the FSR finishes, the files on the source datastore are deleted and the space is reclaimed.
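The iterative copy described above can be illustrated with a toy simulation. This is a minimal sketch, not VMware code: it models a disk as a Python list of block values, assumes the running guest writes to a random block with probability `write_prob` after each block is copied, and uses `threshold` as a stand-in for svMotion’s “small enough” cut-over point (the real value isn’t stated here). The function `simulate_svmotion` and all of its parameters are hypothetical.

```python
import random


def simulate_svmotion(source, write_prob, threshold, max_passes=10, seed=0):
    """Toy model of an iterative, CBT-style Storage vMotion disk copy.

    `source` is a list of integer block values that the running guest keeps
    writing to. Returns (destination, passes, blocks_copied_during_fsr).
    """
    rng = random.Random(seed)
    destination = [None] * len(source)
    dirty = set(range(len(source)))  # first pass: every block must be copied
    passes = 0
    while len(dirty) > threshold and passes < max_passes:
        passes += 1
        to_copy, dirty = dirty, set()  # start tracking changes for this pass
        for block in sorted(to_copy):
            destination[block] = source[block]
            # The guest is still running, so it may write to any block meanwhile.
            if rng.random() < write_prob:
                written = rng.randrange(len(source))
                source[written] += 1
                dirty.add(written)  # changed block tracking records the write
    # FSR: the VM is briefly suspended, so no more writes happen while the
    # remaining dirty blocks are copied and the "shadow VM" takes over.
    # (In this toy model we also cut over if max_passes is reached.)
    for block in dirty:
        destination[block] = source[block]
    return destination, passes, len(dirty)


if __name__ == "__main__":
    disk = list(range(1000))
    dest, passes, final = simulate_svmotion(disk, write_prob=0.1, threshold=16)
    print(f"passes={passes}, blocks copied during FSR={final}, in sync={dest == disk}")
```

Because each pass only re-copies what changed during the previous one, the dirty set shrinks quickly when the guest writes slowly. With a high `write_prob` it shrinks much more slowly and the loop may stop at `max_passes` with many blocks still outstanding, a rough analogue of why IO-intensive VMs are harder to migrate.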

Considerations:

- Storage vMotion can be used to rename VM folders and files on datastores.

- It can also be used to change a VM’s disk provisioning type (for example, from thick to thin).

- Before the svMotion process starts, a capacity check is run against the target datastore, so you can’t migrate a VM to a datastore that doesn’t have enough free space.

- svMotion is “storage agnostic”, meaning it works with anything that can provide a VMware datastore (iSCSI, FCoE, vSAN, NFS, NAS, etc.).

- svMotion can cause performance issues for IO-intensive applications such as databases, so keep that in mind when scheduling migrations.

- The maximum number of concurrent svMotion migrations is 16; however, running that many at once can degrade performance on the storage array.

- While vMotion traffic uses a VMkernel adapter enabled for vMotion, Storage vMotion moves data in one of two ways: over the FC switches if you are using Fibre Channel, or over the Management or Provisioning interfaces.
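To make the capacity check and the concurrency limit concrete, here is a hypothetical pre-flight planner. It is only a sketch: it assumes each VM needs exactly its current size on the destination datastore (real checks also have to account for thin provisioning, snapshots, swap files and so on), and it uses the 16-migration figure above only as the default for `max_concurrent`, which you would likely lower to protect the storage array. `VM` and `plan_svmotion_batches` are made-up names, not part of any VMware API.

```python
from dataclasses import dataclass


@dataclass
class VM:
    name: str
    size_gb: float  # assumption: space the VM needs on the destination datastore


def plan_svmotion_batches(vms, free_gb, max_concurrent=16):
    """Hypothetical pre-flight planner.

    Rejects VMs that would not fit in the destination's free space and groups
    the rest into batches of at most `max_concurrent` simultaneous migrations.
    """
    accepted, rejected = [], []
    remaining_gb = free_gb
    for vm in vms:
        # Capacity check: the destination must have room for the whole VM.
        if vm.size_gb <= remaining_gb:
            accepted.append(vm.name)
            remaining_gb -= vm.size_gb
        else:
            rejected.append(vm.name)
    # Concurrency cap: never start more than max_concurrent migrations at once.
    batches = [accepted[i:i + max_concurrent]
               for i in range(0, len(accepted), max_concurrent)]
    return batches, rejected


if __name__ == "__main__":
    vms = [VM(f"app{i:02d}", 40) for i in range(20)] + [VM("db01", 2000)]
    batches, rejected = plan_svmotion_batches(vms, free_gb=1000, max_concurrent=8)
    print([len(b) for b in batches], rejected)
```

With these numbers the 20 small VMs fit (800 GB of 1000 GB free) and are split into batches of 8, 8 and 4, while `db01` is rejected because it no longer fits in the remaining space.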

Requirements and limitations:

- You need vCenter Server to perform a Storage vMotion.

- The ESXi host on which the VM is running must have access to both the source and destination datastores.

- The ESXi host on which the VM is running must have at least a Standard license.

- Storage vMotion during VMware Tools installation is not supported.

- VM disks must be in persistent mode or be RDMs. If you are using RDMs, you can migrate the mapping file for both physical and virtual compatibility mode RDMs; the data on the mapped LUN itself is not moved. Virtual compatibility mode RDMs can additionally have their provisioning changed (converted to a thin or thick virtual disk) during the migration.
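The requirements above can be expressed as a simple checklist. The sketch below is hypothetical: `MigrationRequest`, `svmotion_blockers`, the license tier names and the disk mode labels are illustrative stand-ins, not vSphere API objects, and the ranking assumes the tiers are ordered Essentials < Essentials Plus < Standard < Enterprise Plus.

```python
from dataclasses import dataclass, field

# Hypothetical ordering of vSphere license tiers (lowest to highest).
LICENSE_RANK = {"Essentials": 0, "Essentials Plus": 1, "Standard": 2, "Enterprise Plus": 3}
# Hypothetical labels for disks that Storage vMotion can migrate.
MIGRATABLE_DISKS = {"persistent", "rdm_physical", "rdm_virtual"}


@dataclass
class MigrationRequest:
    managed_by_vcenter: bool
    host_datastores: set[str]  # datastores the VM's current ESXi host can see
    source_datastore: str
    destination_datastore: str
    host_license: str
    tools_installing: bool
    disk_modes: list[str] = field(default_factory=list)


def svmotion_blockers(req):
    """Return the reasons a request violates the requirements (empty list means OK)."""
    blockers = []
    if not req.managed_by_vcenter:
        blockers.append("Storage vMotion needs vCenter Server")
    for ds in (req.source_datastore, req.destination_datastore):
        if ds not in req.host_datastores:
            blockers.append(f"host cannot access datastore {ds}")
    # Unknown tiers rank below Essentials, so they are treated as insufficient.
    if LICENSE_RANK.get(req.host_license, -1) < LICENSE_RANK["Standard"]:
        blockers.append(f"license {req.host_license!r} is below Standard")
    if req.tools_installing:
        blockers.append("VMware Tools installation in progress")
    for i, mode in enumerate(req.disk_modes):
        if mode not in MIGRATABLE_DISKS:
            blockers.append(f"disk {i} is in unsupported mode {mode!r}")
    return blockers


if __name__ == "__main__":
    req = MigrationRequest(True, {"ds-gold", "ds-silver"}, "ds-gold", "ds-silver",
                           "Standard", False, ["persistent", "rdm_virtual"])
    print(svmotion_blockers(req))  # []
```

Returning every blocker instead of stopping at the first one lets you fix all problems before retrying the migration.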